Our node network distributes the work: just make a regular API request

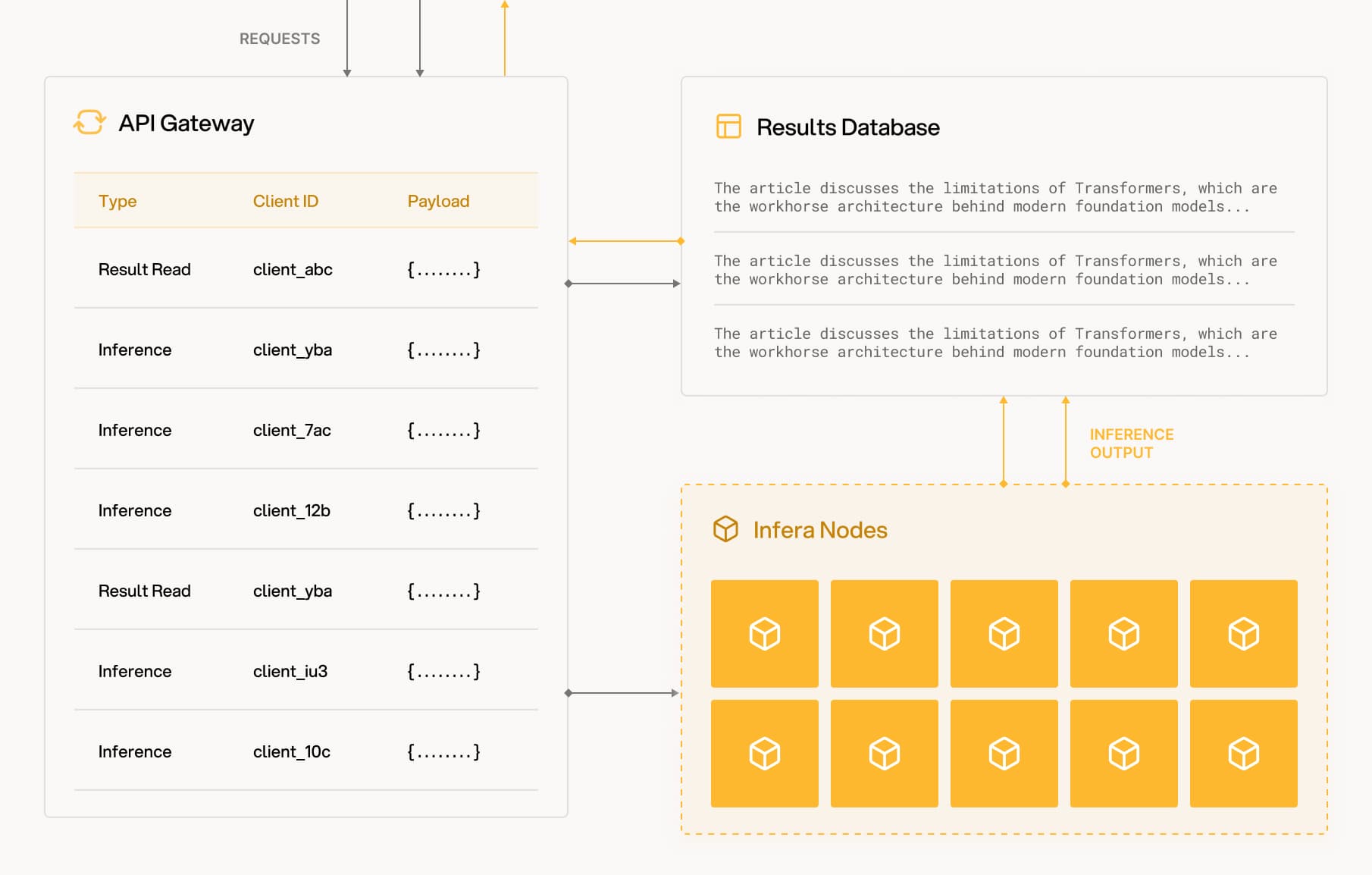

The API Gateway receives inference and read requests from API users

The API Gateway balances the requests and distributes them to Infera nodes

Nodes perform inference and verify the responses among themselves

The results are passed to the results database for storage and retrieval
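The four steps above can be sketched in a few lines of Python. This is only an illustrative model, not Infera's actual gateway: the `Gateway` class, the round-robin balancing strategy, and the in-memory dictionary standing in for the results database are all assumptions made for the example.

```python
from itertools import cycle
from typing import Callable, Dict, List

# Hypothetical stand-in for an Infera node: any callable that maps a prompt to an output.
Node = Callable[[str], str]


class Gateway:
    """Toy API gateway: balances requests across nodes and stores the results."""

    def __init__(self, nodes: List[Node]) -> None:
        if not nodes:
            raise ValueError("at least one node is required")
        # Round-robin is an assumed balancing strategy, chosen for simplicity.
        self._next_node = cycle(nodes)
        # In-memory dict used here as a stand-in for the results database.
        self._results: Dict[int, str] = {}
        self._next_id = 0

    def infer(self, prompt: str) -> int:
        """Handle an inference request and return an ID for later retrieval."""
        node = next(self._next_node)
        request_id = self._next_id
        self._next_id += 1
        self._results[request_id] = node(prompt)
        return request_id

    def read(self, request_id: int) -> str:
        """Handle a read request by looking up a stored result."""
        return self._results[request_id]
```

With two toy nodes, `Gateway([node_a, node_b])` would send the first `infer` call to `node_a` and the second to `node_b`, and `read` would return whatever each node produced.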

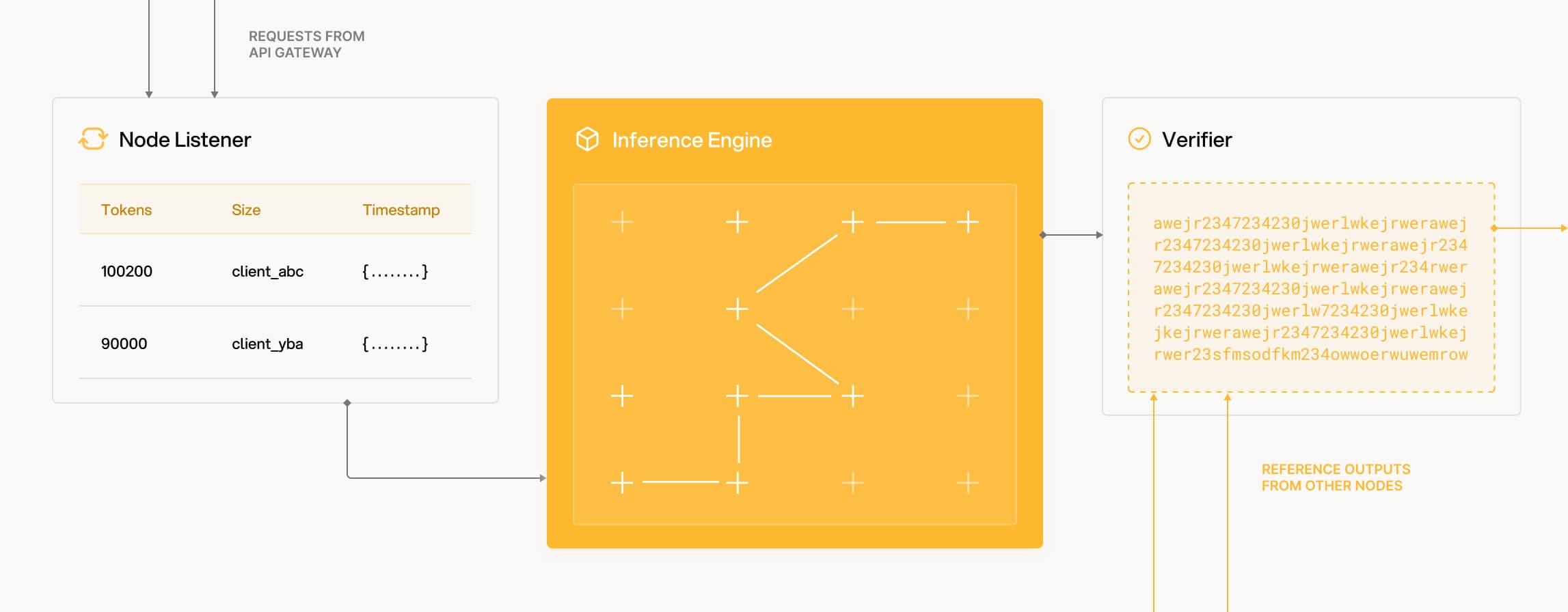

Nodes accept and complete inference requests, which are then verified by other nodes.

Nodes listen for inference requests broadcast from the load balancer

When a job is received, the input is passed to the node's inference engine

After inference, the results are verified against the outputs of reference nodes for the same input

Results are then routed back to the load balancer alongside the verification results
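As a rough illustration of the node-side flow, the sketch below runs a job through an inference engine, compares the output with reference outputs, and returns both together. The names `handle_job` and `JobResult`, the use of `difflib.SequenceMatcher` as the similarity measure, the 0.8 threshold, and the majority rule are all assumptions for the example; the source does not specify how Infera scores similarity.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Callable, List

# Hypothetical stand-in for an inference engine: maps an input to an output.
Engine = Callable[[str], str]


@dataclass
class JobResult:
    output: str               # this node's inference output
    agreeing_references: int  # reference outputs similar enough to ours
    total_references: int     # reference outputs consulted
    verified: bool            # True if a strict majority agreed


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity score (assumed metric, for illustration only)."""
    return SequenceMatcher(None, a, b).ratio()


def handle_job(
    prompt: str,
    engine: Engine,
    reference_engines: List[Engine],
    threshold: float = 0.8,  # assumed cutoff for "similar"
) -> JobResult:
    """Run inference, verify against reference nodes, and bundle the outcome."""
    output = engine(prompt)
    reference_outputs = [ref(prompt) for ref in reference_engines]
    agreeing = sum(
        1 for ref_out in reference_outputs
        if similarity(output, ref_out) >= threshold
    )
    # A strict majority of references must agree; zero references means unverified.
    verified = agreeing * 2 > len(reference_outputs)
    return JobResult(output, agreeing, len(reference_outputs), verified)
```

The returned `JobResult` carries the output and the verification outcome in one object, mirroring how results travel back to the load balancer together with their verification results.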

Becoming a node runner is easy: start earning rewards in just five minutes

1. Install the Infera App

Install our desktop app and web extension to turn your computer into an Infera node that contributes compute.

2. Run our Node

When you toggle the app on, your computer will start performing tasks in the background.

3. Start earning

Using our desktop app or extension, you will be able to claim your INFER token rewards.